It has been a couple of weeks since my last blog post, and for a good reason: my second son arrived earlier this month.

I announced his arrival with some posts about some of his ancestors. One of the posts contained a video from the early 1950s.

There is an online tool, DeOldify (Jupyter/Python), that can colorize black-and-white photos and videos.

What It Does

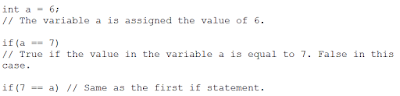

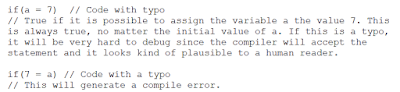

DeOldify is based on Generative Adversarial Networks (GANs), in which two neural networks compete with each other. One network (the generator) tries to produce realistic colorized pictures, and the other (the discriminator) tries to tell whether pictures are authentic or colorized. The generator tries to fool the discriminator, and the discriminator tries to catch the generator. By training against each other, they learn, for example, to create pictures that appear realistic to human beings.
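To make the tug-of-war a bit more concrete, here is a minimal sketch of the two competing objectives in a textbook GAN. It is not DeOldify's actual training code; the function names are made up for illustration, and the inputs are simply the discriminator's estimated probability that a picture is authentic.

```python
import math


def bce(prediction, target):
    """Binary cross-entropy for a single probability."""
    eps = 1e-12  # keep log() away from 0
    p = min(max(prediction, eps), 1 - eps)
    return -(target * math.log(p) + (1 - target) * math.log(1 - p))


def discriminator_loss(d_real, d_fake):
    # The discriminator wants to say 1 for authentic pictures
    # and 0 for colorized ones.
    return bce(d_real, 1.0) + bce(d_fake, 0.0)


def generator_loss(d_fake):
    # The generator wants the discriminator to say 1 for its
    # colorized pictures, i.e. to be fooled.
    return bce(d_fake, 1.0)


# A discriminator that separates the two classes well has a low loss...
print(discriminator_loss(d_real=0.9, d_fake=0.1))
# ...while the generator's loss is high when its output is spotted as fake.
print(generator_loss(d_fake=0.1))
```

Each network is trained to lower its own loss, and lowering one tends to raise the other, which is what drives both of them to improve.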

DeOldify uses an extension of GANs called NoGAN. NoGAN eliminates some side effects of GANs for videos, for example flickering colors between frames.

You can find an interview with one of the inventors below.

How to Use:

Step 1: I uploaded the black-and-white video file to YouTube. I wanted it to be available to DeOldify, but I didn't want it to be searchable yet, so I used the unlisted option. With the unlisted option, one needs the direct link to the video in order to see it.

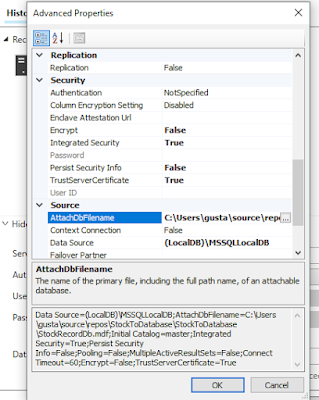

Step 2: Follow the steps in the DeOldify Colab notebook. One can run it in the cloud or, if desired, on a local computer. Provide the link to the video from step 1 when it is time to run the Colorize! step.

Step 3: The colorization processes only a couple of frames per second, so this will take some time. Once the colorization is done, download the video file to your computer.
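For a rough idea of the waiting time, a back-of-the-envelope estimate can be sketched like this. The numbers are assumptions for illustration only: 2 frames per second is my reading of "a couple", and the 3-minute, 24 fps clip is a made-up example, not my actual video.

```python
def estimate_colorization_minutes(duration_seconds, video_fps,
                                  frames_processed_per_second=2.0):
    """Estimate how many minutes colorizing a video takes."""
    total_frames = duration_seconds * video_fps
    return total_frames / frames_processed_per_second / 60


# Hypothetical 3-minute clip recorded at 24 frames per second.
print(estimate_colorization_minutes(180, 24))  # 36.0
```

So even a short clip can easily take half an hour or more, depending on the hardware the notebook runs on.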

My original video:

My colorized video:

I added a small watermark in the lower-left corner of the video to indicate that it has been post-processed.