He described his code to the duck line by line, in terms simple enough for a rubber duck to understand. After describing a couple of lines, he understood the issue himself.

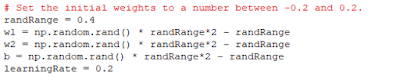

I'll try to do this for a simple neural network program that I have. It is based on a tutorial for a very simple neural network:

The input data is weighted and summed up to generate a prediction. In the example, the length is on the X axis and the width on the Y axis. The training data consists of blue flowers (0) and red flowers (1). The gray flowers are to be classified by the neural network.
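The prediction step can be sketched in a few lines. This is a minimal sketch that assumes, as many introductory tutorials do, that the weighted sum is squashed by a sigmoid so the output lands between 0 (blue) and 1 (red). The function names `sigmoid` and `predict` and the parameter names `w1`, `w2`, `b` are illustrative and not taken from the original program.

```python
import math


def sigmoid(z: float) -> float:
    """Squash any real number into the interval (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def predict(length: float, width: float, w1: float, w2: float, b: float) -> float:
    """Weight the two inputs, add the bias, and squash the sum.

    The result is read as "how red" the flower is:
    values near 0 mean blue, values near 1 mean red.
    """
    z = w1 * length + w2 * width + b
    return sigmoid(z)


# With all parameters at zero, the weighted sum is 0 and sigmoid(0) = 0.5,
# i.e. the network cannot tell blue from red yet.
print(predict(3.0, 1.5, 0.0, 0.0, 0.0))  # 0.5
```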

To train the network, I iterate over all known flowers and estimate the color of each one using the current weights. I sum up the errors as a metric of the neural network's progress. The weights and bias are then adjusted by calculating derivatives (slopes) of a cost function.
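The training loop described above can be sketched as follows. This assumes a sigmoid output and a squared-error cost per flower, and it updates the parameters after each flower (stochastic gradient descent); the original program's exact cost function, learning rate and update schedule are not stated, so treat those as assumptions. The sample points are hypothetical and only serve to make the sketch runnable.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train(data, iterations, learning_rate=0.2, seed=0):
    """Stochastic gradient descent on a single sigmoid neuron.

    data: list of (length, width, target) with target 0 (blue) or 1 (red).
    Returns the final (w1, w2, b) and the summed squared error of each pass.
    """
    rng = random.Random(seed)
    # Start from a random guess, like the "0 (guess)" row in the table.
    w1, w2, b = rng.gauss(0, 1), rng.gauss(0, 1), rng.gauss(0, 1)
    history = []
    for _ in range(iterations):
        total_cost = 0.0
        for length, width, target in data:
            pred = sigmoid(w1 * length + w2 * width + b)
            total_cost += (pred - target) ** 2
            # Chain rule: d(cost)/dz = 2 * (pred - target) * pred * (1 - pred)
            dcost_dz = 2.0 * (pred - target) * pred * (1.0 - pred)
            # dz/dw1 = length, dz/dw2 = width, dz/db = 1
            w1 -= learning_rate * dcost_dz * length
            w2 -= learning_rate * dcost_dz * width
            b -= learning_rate * dcost_dz
        history.append(total_cost)
    return (w1, w2, b), history


# Hypothetical sample points: (length, width, target), 0 = blue, 1 = red.
data = [
    (3.0, 1.5, 1), (2.0, 1.0, 0), (4.0, 1.5, 1), (3.0, 1.0, 0),
    (3.5, 0.5, 1), (2.0, 0.5, 0), (5.5, 1.0, 1), (1.0, 1.0, 0),
]
(w1, w2, b), history = train(data, iterations=1000)
print(f"cost first pass: {history[0]:.3f}, last pass: {history[-1]:.3f}")
```

The summed cost per pass plays the role of the "Training data cost" metric: it should shrink as the weights and bias move downhill along the slope of the cost function.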


I ran the program several times for the same dataset but with different numbers of iterations over the training data.

| Iterations | w1 | w2 | b | Flower 0 prediction | Flower 0 cost | Flower 1 prediction | Flower 1 cost | Training data cost |
|---|---|---|---|---|---|---|---|---|
| 0 (guess) | -0.134 | -0.033 | -0.253 | 0.381 | 0.145 | 0.291 | 0.502 | NaN |
| 1 | 0.109 | 0.142 | 0.165 | 0.616 | 0.379 | 0.690 | 0.096 | 2.51 |
| 10 | 0.345 | -0.334 | -0.954 | 0.317 | 0.100 | 0.566 | 0.188 | 1.742 |
| 100 | 0.900 | -0.530 | -3.126 | 0.091 | 8.228e-03 | 0.598 | 0.162 | 1.394 |
| 1 000 | 1.576 | -0.316 | -5.865 | 21.51e-03 | 462.5e-06 | 0.713 | 82.45e-03 | 1.316 |
| 10 000 | 2.250 | -0.102 | -8.392 | 5.948e-03 | 3.538e-05 | 0.836 | 26.78e-03 | 1.316 |
| 10 000 | 2.250 | -0.103 | -8.391 | 5.945e-03 | 3.536e-05 | 0.836 | 26.73e-03 | 1.316 |
| 10 000 | 2.251 | -0.106 | -8.390 | 5.945e-03 | 3.534e-05 | 0.837 | 26.66e-03 | 1.315 |
| 100 000 | 2.907 | 0.120 | -10.78 | 1.833e-03 | 3.360e-06 | 0.918 | 6.660e-03 | 1.239 |
| 1 000 000 | 3.547 | 0.373 | -13.12 | 0.595e-03 | 3.543e-07 | 0.961 | 1.491e-03 | 1.192 |
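The per-flower cost columns appear to follow a squared-error cost, $C = (\hat{y} - y)^2$, with target $y = 0$ for flower 0 (blue) and $y = 1$ for flower 1 (red). This is inferred from the numbers rather than stated about the original program. Checking it against the initial-guess row:

$$
C_0 = (0.381 - 0)^2 \approx 0.145, \qquad
C_1 = (0.291 - 1)^2 = 0.709^2 \approx 0.503
$$

The small gap to the table's 0.502 is most likely due to the prediction being rounded to three decimals.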

As expected, the first iteration is basically a guess. It takes many iterations to get predictions that are close to the actual values. For flower 0 (blue), it takes thousands of iterations, and for flower 1 (red), it takes hundreds of thousands of iterations.

I also ran a prediction on the same training data but with a test flower that had a slightly shorter blade. The neural network found that flower much harder to classify (it predicted 51% red). As the training data shows, the rightmost gray (unknown) flower is surrounded by red ones. However, the blue flowers in the middle may still reach out to it. The leftmost gray flower is easier to place.

Iterating hundreds of thousands of times takes a while. I need to find better ways to estimate the new parameters, for example through optimizations and built-in functions.
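One common speed-up is to replace the per-flower Python loop with array operations, so that all predictions and gradients are computed at once. This is a minimal sketch assuming NumPy is available and assuming a sigmoid neuron with a squared-error cost. It also swaps in full-batch gradient descent (one update per pass, using the gradient averaged over all flowers), which may differ from the original program's update schedule. The function `train_vectorized` and the sample points are hypothetical.

```python
import numpy as np


def train_vectorized(X, y, iterations, learning_rate=0.5, seed=0):
    """Full-batch gradient descent for one sigmoid neuron.

    X: array of shape (n, 2) with (length, width) per flower.
    y: array of shape (n,) with targets 0 (blue) or 1 (red).
    Returns the weights (w1, w2), the bias and the summed cost per iteration.
    """
    rng = np.random.default_rng(seed)
    w = rng.normal(size=2)  # w1, w2 start as a random guess
    b = rng.normal()
    n = len(y)
    costs = np.empty(iterations)
    for i in range(iterations):
        pred = 1.0 / (1.0 + np.exp(-(X @ w + b)))  # all predictions at once
        error = pred - y
        costs[i] = np.sum(error ** 2)
        dcost_dz = 2.0 * error * pred * (1.0 - pred)  # shape (n,)
        # Average the gradients over all flowers before taking a step.
        w -= learning_rate * (X.T @ dcost_dz) / n
        b -= learning_rate * np.mean(dcost_dz)
    return w, b, costs


# Hypothetical sample points: columns are length and width.
X = np.array([[3.0, 1.5], [2.0, 1.0], [4.0, 1.5], [3.0, 1.0],
              [3.5, 0.5], [2.0, 0.5], [5.5, 1.0], [1.0, 1.0]])
y = np.array([1, 0, 1, 0, 1, 0, 1, 0], dtype=float)
w, b, costs = train_vectorized(X, y, iterations=5000)
print(f"cost first iteration: {costs[0]:.3f}, last: {costs[-1]:.3f}")
```

The speed-up comes from NumPy running the inner loop over flowers in compiled code; the outer loop over iterations remains.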

I see three factors that will make machine learning difficult:

- Machine learning takes a lot of computational resources.

- The data is often imperfect due to bad sensors, operator errors and other factors.

- The world itself is often irregular, with stochastic processes and unknown unknowns that will confuse the learning of neural networks.

Why code the algorithm myself instead of using one of the existing implementations? The purpose of this experiment is to learn neural networks from the ground up, not to make cool predictions without understanding what I'm doing.

In the next blog post, I'll try to use some real-world data to see whether it is possible to predict the outcome.
