Use English when describing the data

Using another language is a very common mistake for non-native English speakers, especially if one believes that the data will have a very limited audience. Applications have a tendency to grow and involve more people.

Use standardized formats for input data

I've seen some strange data files for other applications, and they are a nightmare to decode. Unless there are strong reasons to save storage space, I recommend a plain text format or a database:

- csv - Compact for data. However, a missing separator will shift all following values in the row to the left, which can be difficult to recover from.

- json - Quite compact for data, but slightly harder to implement. Each value is stored together with its key, so it is clear what the different values represent.

- xml - This will generate some overhead and may be difficult to implement, unless there is a handy XML class that can handle the encoding.

- Database - Feed the scraped data directly into a database. This is a bit complex but has some advantages: the database will flag errors, the data labeling is clear, and the storage is compact. One disadvantage is that it takes some time to set up the database.

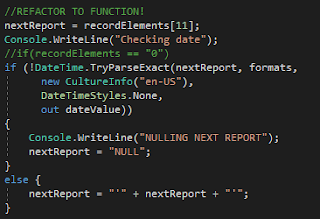
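As a rough illustration of the csv and json options, here is a minimal sketch using only the Python standard library. The records and field names (`ticker`, `price`, `dividend_yield`) are made up for the example, and the output is kept in memory rather than written to a file.

```python
import csv
import io
import json

# Hypothetical scraped records; the field names are illustrative only.
records = [
    {"ticker": "AAA", "price": 12.5, "dividend_yield": 3.1},
    {"ticker": "BBB", "price": 40.0, "dividend_yield": None},  # missing value
]
fieldnames = ["ticker", "price", "dividend_yield"]

# CSV: DictWriter writes every separator, so a missing value becomes an
# empty field instead of shifting the remaining columns to the left.
csv_buffer = io.StringIO()
writer = csv.DictWriter(csv_buffer, fieldnames=fieldnames)
writer.writeheader()
writer.writerows(records)
csv_text = csv_buffer.getvalue()

# JSON: each value is stored next to its key, so its meaning stays explicit.
json_text = json.dumps(records, indent=2)
```

Letting a library write the separators, rather than joining strings by hand, is one way to avoid the shifted-column problem described for csv above.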

Handle bad or missing data directly

If there are flaws in the web scraper or the web server, the data will be flawed. This becomes a big problem if the scraping continues unnoticed: big chunks of data may be missing or erroneous.

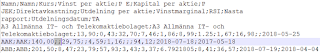
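One way to catch bad data directly is to validate every record at scrape time and set aside anything suspicious. The sketch below assumes records are dictionaries with hypothetical `ticker` and `price` fields; the rules themselves are only examples.

```python
import logging

logger = logging.getLogger("scraper")

REQUIRED_FIELDS = ("ticker", "price")  # hypothetical field names


def validate_record(record):
    """Return a list of problems found in one scraped record."""
    problems = []
    for field in REQUIRED_FIELDS:
        if record.get(field) in (None, ""):
            problems.append(f"missing field: {field}")
    price = record.get("price")
    if price not in (None, ""):
        try:
            if float(price) <= 0:
                problems.append(f"non-positive price: {price!r}")
        except (TypeError, ValueError):
            problems.append(f"price is not a number: {price!r}")
    return problems


def store_or_reject(record, good, rejected):
    """Sort a record into the good or rejected list as soon as it is scraped."""
    problems = validate_record(record)
    if problems:
        logger.warning("Rejected %r: %s", record, "; ".join(problems))
        rejected.append((record, problems))
    else:
        good.append(record)
```

Keeping the rejected records together with the reason makes it easier to see later whether the scraper or the web server was at fault.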

Test the data and the format

This will help you find flawed data early. Tests may check the number of data points, the type of data (integer/float/names) and how the data changes over time.
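The three kinds of checks mentioned here could look roughly like the sketch below. The row layout (`name`, `price`), the expected row count and the 50 % threshold for a suspicious change are all assumptions chosen for the example.

```python
def check_batch(rows, expected_count, previous_prices=None, max_change=0.5):
    """Return a list of human-readable problems for one scraped batch."""
    problems = []

    # 1. Number of data points
    if len(rows) != expected_count:
        problems.append(f"expected {expected_count} rows, got {len(rows)}")

    for row in rows:
        name, price = row.get("name"), row.get("price")

        # 2. Type of data (bool is a subclass of int, so exclude it explicitly)
        name_ok = isinstance(name, str) and name != ""
        price_ok = isinstance(price, (int, float)) and not isinstance(price, bool)
        if not name_ok:
            problems.append(f"bad name: {name!r}")
        if not price_ok:
            problems.append(f"bad price for {name!r}: {price!r}")

        # 3. Change over time, compared with the previous scrape
        if name_ok and price_ok and previous_prices:
            old = previous_prices.get(name)
            if old and abs(price - old) / abs(old) > max_change:
                problems.append(f"{name}: price jumped from {old} to {price}")

    return problems
```

An empty list means the batch passed; anything else is worth a look before the data is stored.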

Cross-verify numbers

It can be useful to scrape some extra data for cross-checks. For example, scraping Price, Earnings and Price/Earnings will make it easy to check that those values are valid.
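A cross-check of this kind can be a few lines of code. The sketch below assumes that the scraped earnings figure is per share and that the site rounds its numbers, so a relative tolerance of 2 % is allowed; both are assumptions for the example.

```python
import math


def pe_is_consistent(price, earnings_per_share, reported_pe, rel_tol=0.02):
    """Check that the reported P/E matches price divided by earnings per share."""
    if earnings_per_share == 0:
        return False  # P/E is undefined, so the reported value cannot be verified
    computed_pe = price / earnings_per_share
    return math.isclose(computed_pe, reported_pe, rel_tol=rel_tol)
```

For example, `pe_is_consistent(50.0, 2.5, 20.0)` passes, while a reported P/E of 25.0 for the same price and earnings does not.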

Handle changes in the data

This is quite obvious. The web services that you want to scrape will change their interfaces once in a while, and that will break your web scraper. As mentioned above, you need to notice that immediately; otherwise you'll have tonnes of data that need to be fixed.

Automate the web scraping

After all, the purpose of computers is to liberate people from manual labor. This applies to web scraping too. If the scraper isn't started automatically, it is easy to forget to run it.

It is easy to schedule the web scraping script using cron (Linux) or the Task Scheduler (Windows). I strongly recommend that you check that the tasks are really started as they should be; the Task Scheduler, at least, isn't totally reliable.
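One simple way to check that scheduled runs really happen is a heartbeat file: the scraper writes a timestamp every time it starts, and a separate check complains if the timestamp is too old. This is only a sketch of that idea; the function names and the file location are hypothetical.

```python
import time
from pathlib import Path


def record_run(heartbeat_file):
    """Call this at the start of every scraper run."""
    Path(heartbeat_file).write_text(str(time.time()))


def last_run_is_recent(heartbeat_file, max_age_seconds, now=None):
    """Return True if the scraper has started within the last max_age_seconds."""
    path = Path(heartbeat_file)
    if not path.exists():
        return False  # the scraper has never run
    now = time.time() if now is None else now
    return now - float(path.read_text()) <= max_age_seconds
```

For a daily job, calling `last_run_is_recent(path, 26 * 3600)` from a second, independent task would reveal a skipped run within a couple of hours.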

Make the data database-friendly

It is very likely that you will send the data to a database sooner or later. Considering how to do that early will save you a lot of effort.
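To give an idea of what database-friendly can mean in practice, here is a minimal sketch using Python's built-in `sqlite3` module. The table and column names are invented for the example; the point is that every value has a fixed column and type, and that missing values are stored as NULL.

```python
import sqlite3


def open_database(path=":memory:"):
    """Open (or create) the database and make sure the table exists."""
    conn = sqlite3.connect(path)
    # quote_date is stored as an ISO 8601 string such as 2024-01-31.
    # dividend_yield may be NULL when the source has no value.
    conn.execute(
        """
        CREATE TABLE IF NOT EXISTS quotes (
            ticker TEXT NOT NULL,
            quote_date TEXT NOT NULL,
            price REAL NOT NULL,
            dividend_yield REAL,
            PRIMARY KEY (ticker, quote_date)
        )
        """
    )
    return conn


def insert_quote(conn, ticker, quote_date, price, dividend_yield=None):
    """Insert one row; duplicates and missing prices raise IntegrityError."""
    with conn:  # commits on success, rolls back on error
        conn.execute(
            "INSERT INTO quotes VALUES (?, ?, ?, ?)",
            (ticker, quote_date, price, dividend_yield),
        )
```

If the scraped files already use one row per item and date with consistent English column names, loading them into a table like this later is mostly mechanical.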

Side note:

One very common issue is missing yield values. The gaps can span several months of data. I've created a Python script to resolve that issue by adding an empty piece of data for each missing piece of information.
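The script itself is not shown here, but the general idea can be sketched as follows. This is not the original script: it assumes one yield value per month, keyed by a "YYYY-MM" string, and uses `None` as the empty placeholder.

```python
def fill_missing_months(yields_by_month, first_month, last_month):
    """Return a dict with one entry per month; gaps are filled with None.

    Months are "YYYY-MM" strings (an assumption made for this sketch).
    """
    year, month = map(int, first_month.split("-"))
    end_year, end_month = map(int, last_month.split("-"))

    filled = {}
    while (year, month) <= (end_year, end_month):
        key = f"{year:04d}-{month:02d}"
        filled[key] = yields_by_month.get(key)  # None marks a missing value
        month += 1
        if month > 12:
            year, month = year + 1, 1
    return filled
```

With an explicit placeholder for every month, the series keeps a regular shape, and the gaps stay visible instead of silently disappearing.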