As I've stressed several times before, this blog describes my learning curve in programming. If you find errors or areas where I have misunderstood the concepts that I explore, you are welcome to comment or contact me.

My Understanding of Stocks

In theory, it is very easy to tell the value of a stock. Sum up all future dividends that the stock will generate, compensate for future inflation, et voilà! You have the value of that stock. The problem, obviously, is that no one has that information. Instead, the pricing and valuation of stocks is a subject of debate and drives all stock trading.
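The idea above can be sketched in a few lines of Python. This is a minimal sketch under two assumptions of my own: the future yearly dividends are known, and they are discounted with one constant yearly rate. Each dividend paid in year t is divided by (1 + rate)^t before everything is summed. The function name `present_value` is hypothetical.

```python
def present_value(dividends, rate):
    """Sum of future yearly dividends, each discounted back to today.

    dividends[0] is paid one year from now, dividends[1] two years from now, etc.
    rate is an assumed constant yearly discount rate, e.g. 0.05 for 5 %.
    """
    return sum(d / (1 + rate) ** year
               for year, d in enumerate(dividends, start=1))


if __name__ == "__main__":
    # Hypothetical example: a dividend of 10 per year for three years at 5 %.
    print(round(present_value([10, 10, 10], 0.05), 2))  # prints 27.23
```

With a 5 % rate, three yearly dividends of 10 are worth about 27.23 today rather than 30.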

When a stock trade takes place, the two parties have different ideas of the value of that stock. The seller thinks that the price is so high that he/she prefers the money to the stock. The buyer thinks that the same price is so low that he/she prefers the stock to the money.

The Efficient Market Hypothesis is central to this subject. Put simply, it assumes that all relevant information about a stock is already reflected in the stock price. Based on that theory, it would be impossible to systematically outperform the stock market.

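The weak form of the Efficient Market Hypothesis makes a narrower claim: stock/asset prices reflect all information contained in past prices, but not necessarily all other available information. According to this version, studying past prices alone will not beat the market, but there may still be biases in how other information is priced that can be used to outperform it.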

Technical Analysis is another field of financial analysis that tries to predict future stock prices based on past stock prices. The opposite is fundamental analysis, which looks at the company itself when predicting the stock price: how it is doing, its competitors, assets, returns, etc.
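To make "predicting from past prices" concrete, one of the simplest technical indicators is the moving average of recent closing prices. A minimal sketch, assuming a plain list of daily closing prices; `moving_average` and the window length are hypothetical choices, and computing the indicator says nothing about whether it actually predicts anything.

```python
def moving_average(prices, window):
    """Simple moving average for every position where a full window exists.

    The first value averages prices[0:window], the next prices[1:window + 1], etc.
    Returns an empty list if there are fewer prices than the window length.
    """
    if window <= 0:
        raise ValueError("window must be positive")
    return [sum(prices[i - window:i]) / window
            for i in range(window, len(prices) + 1)]


if __name__ == "__main__":
    # Hypothetical closing prices for five days, 3-day window.
    print(moving_average([10, 11, 12, 13, 14], 3))  # prints [11.0, 12.0, 13.0]
```

A common technical rule compares a short and a long moving average. Whether such signals carry any information is exactly what the efficient market hypothesis disputes.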

My personal hunch is that Technical Analysis is too much like magic for me and that the crowd does a better job than I do when evaluating stocks. I lean towards a form of the efficient market hypothesis, and I use low-cost index funds for my limited investments.

I see my project more as an exercise in machine learning, time series analysis and correlation studies than as a way to make money on stock-picking.

My Data

The data that I collect is:

- Name

- Name (again - a feature from the early versions of the web scraper)

- Price

- Earnings per share

- Price per earnings, P/E (redundant - can be used for checks)

- Capital per share

- Price per capital per share (redundant - can be used for checks)

- Returns per share

- Dividend (redundant - can be used for checks)

- Profit margin

- ROI

- Dividend date - this data was not collected in the first years of web scraping

- Date of next report - this data was not collected in the first years of web scraping
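Since the price per earnings (P/E) is simply the price divided by the earnings per share, the redundant fields can be used to sanity-check each scraped row. A minimal sketch, assuming the values have already been converted to numbers; the function name `is_consistent` and the 1 % tolerance are hypothetical. The same check works for price per capital per share.

```python
import math


def is_consistent(price, earnings_per_share, pe_ratio, rel_tol=0.01):
    """Check that the scraped P/E roughly equals price / earnings per share.

    Returns False when earnings per share is zero, since P/E is then undefined.
    rel_tol is an assumed tolerance that allows for rounding on the web page.
    """
    if earnings_per_share == 0:
        return False
    return math.isclose(price / earnings_per_share, pe_ratio, rel_tol=rel_tol)


if __name__ == "__main__":
    print(is_consistent(150.0, 10.0, 15.0))  # prints True
    print(is_consistent(150.0, 10.0, 20.0))  # prints False
```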

The data is separated by semicolons. Some fields are empty.
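Reading such a file could look like the sketch below. It assumes one stock per line in the column order of the list above, no header row, and numeric fields that contain plain numbers without units or percent signs; the column names are my own hypothetical labels. Empty fields become `None`, so missing data stays visible instead of silently turning into zero, and rows from the early years that lack the two date columns are padded.

```python
import csv
import io

# Hypothetical column names, in the order of the field list above.
COLUMNS = [
    "name", "name_again", "price", "eps", "pe", "capital_per_share",
    "price_per_capital", "returns_per_share", "dividend",
    "profit_margin", "roi", "dividend_date", "next_report_date",
]
# Columns kept as text; all others are parsed as numbers.
TEXT_COLUMNS = {"name", "name_again", "dividend_date", "next_report_date"}


def parse_row(fields):
    """Turn one list of raw strings into a dict; empty fields become None."""
    # Pad short rows (e.g. without the date columns) with empty fields.
    fields = fields + [""] * (len(COLUMNS) - len(fields))
    record = {}
    for column, raw in zip(COLUMNS, fields):
        raw = raw.strip()
        if raw == "":
            record[column] = None
        elif column in TEXT_COLUMNS:
            record[column] = raw
        else:
            # Assumption: accept a decimal comma as well as a decimal point.
            record[column] = float(raw.replace(",", "."))
    return record


def parse_file(text):
    """Parse semicolon-separated text with one stock per line."""
    reader = csv.reader(io.StringIO(text), delimiter=";")
    return [parse_row(row) for row in reader if row]


if __name__ == "__main__":
    sample = "Acme;Acme;150,5;10;15;50;3;;5;12.5;8\n"  # hypothetical row
    row = parse_file(sample)[0]
    print(row["price"], row["returns_per_share"], row["dividend_date"])
    # prints 150.5 None None
```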

I collect the data from an online business newspaper using a web scraper. One brutal insight when dealing with real-world data is that it isn't always perfect:

- Stocks are sometimes split (one old stock is divided into several new stocks)

- The format of the data on the target web page changes

- Data is sometimes missing, for example the dividend.
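A split makes the raw price series jump even though the value of a holding is unchanged. One common fix is to divide every per-share figure recorded before the split by the split ratio, so that old and new values become comparable. A minimal sketch, assuming the split date and ratio are known (they are not part of the scraped data, so they would have to be entered by hand); `adjust_for_split` is a hypothetical helper, and missing values are left as `None`.

```python
from datetime import date


def adjust_for_split(series, split_date, ratio):
    """Return a copy of series with values before split_date divided by ratio.

    series is a list of (date, value) pairs for a per-share figure such as
    price, earnings per share or dividend. ratio is new stocks per old stock,
    e.g. 2 for a 2-for-1 split. Missing values (None) are kept as they are.
    Ratios such as P/E are unaffected by a split and need no adjustment.
    """
    if ratio <= 0:
        raise ValueError("ratio must be positive")
    return [(day, value / ratio if value is not None and day < split_date else value)
            for day, value in series]


if __name__ == "__main__":
    prices = [(date(2020, 1, 2), 200.0), (date(2020, 1, 3), 100.0)]
    print(adjust_for_split(prices, date(2020, 1, 3), 2))
```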

I will likely discover more issues with the data in future blog posts.

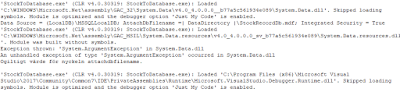

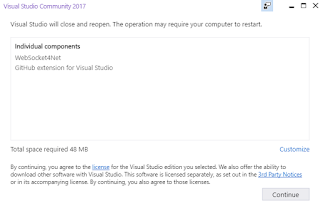

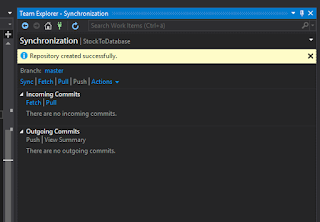

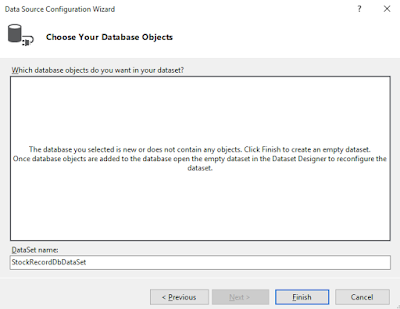

The next step is to create a Windows app in Visual Studio using C#.