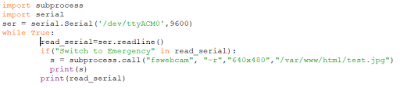

Previously, I set up a web camera with an update interval of 5 seconds. Now, I want to stream video from the camera.

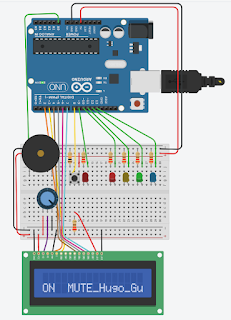

Option 1: Using a Script to Implement a Super Simple Web Server with Webcam

I followed the tutorial and got a pretty good result. There is some lag in the video stream, but overall the experience is quite good.

The Python script implements:

- a small web server of its own, which can make the stream hard to embed into a larger website.

- a video stream from the camera that is fed to the web page.

In this case, the web server and stream are on port 8000.
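The tutorial's script isn't reproduced here, but the idea behind it can be sketched with Python's standard library alone. This is a minimal, hypothetical version, not the tutorial's code: it serves a page at / and an MJPEG stream at /stream.mjpg on port 8000, sending each JPEG frame as one part of a multipart/x-mixed-replace response. get_jpeg_frame() is a placeholder that would have to be connected to the camera, and the pacing of roughly 10 frames per second is an arbitrary assumption.

```python
import http.server
import time

BOUNDARY = "FRAME"  # arbitrary multipart boundary name (assumption)
PAGE = b'<html><body><img src="stream.mjpg" width="640" height="480"></body></html>'


def get_jpeg_frame() -> bytes:
    """Hypothetical frame source: replace with code that grabs one JPEG from the camera."""
    raise NotImplementedError("connect this to the camera")


def mjpeg_part(jpeg: bytes) -> bytes:
    """Wrap one JPEG image as a single part of a multipart/x-mixed-replace response."""
    header = (
        f"--{BOUNDARY}\r\n"
        "Content-Type: image/jpeg\r\n"
        f"Content-Length: {len(jpeg)}\r\n\r\n"
    ).encode("ascii")
    return header + jpeg + b"\r\n"


class StreamingHandler(http.server.BaseHTTPRequestHandler):
    def do_GET(self):
        if self.path == "/":
            # The HTML page that shows the stream in an <img> tag
            self.send_response(200)
            self.send_header("Content-Type", "text/html")
            self.send_header("Content-Length", str(len(PAGE)))
            self.end_headers()
            self.wfile.write(PAGE)
        elif self.path == "/stream.mjpg":
            # The browser replaces the image each time a new part arrives
            self.send_response(200)
            self.send_header("Content-Type", f"multipart/x-mixed-replace; boundary={BOUNDARY}")
            self.send_header("Cache-Control", "no-cache")
            self.end_headers()
            try:
                while True:
                    self.wfile.write(mjpeg_part(get_jpeg_frame()))
                    time.sleep(0.1)  # roughly 10 frames per second
            except (BrokenPipeError, ConnectionResetError):
                pass  # the viewer closed the page
        else:
            self.send_error(404)


def run(port: int = 8000) -> None:
    """Serve the page and the stream; one thread per connected viewer."""
    http.server.ThreadingHTTPServer(("", port), StreamingHandler).serve_forever()
```

On the Pi, calling run() and opening http://<pi-address>:8000/ in a browser should show the stream. Because ThreadingHTTPServer handles each client in its own thread, one slow viewer doesn't block the others.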

The drawback with this approach is that the web server is extremely simple and hard to integrate with other functionality. The other option is even simpler: using YouTube to stream the video.

Option 2: Stream Video Over YouTube

Step 1 - Preparations

First, I need to enable live streaming on YouTube:

"Sänd live" translates to "Go live".

There is a 24-hour wait before the "Go live" feature is activated. In the mobile app, "Go live" seems to be available only to channels with more than 1,000 subscribers. I plan to stream from the RPI using an encoder, so I hope it will work anyway.

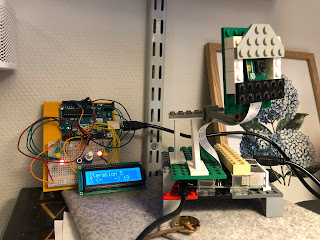

I expect streaming from an RPI to generate quite a bit of heat, so I have removed the Lego case from my RPI as a precaution.
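To check whether removing the case is enough, the SoC temperature can be logged while the stream runs. A minimal sketch, assuming Raspberry Pi OS exposes the temperature in millidegrees Celsius at /sys/class/thermal/thermal_zone0/temp; the function names and the sampling interval are my own choices:

```python
import time
from pathlib import Path

# Assumed location of the SoC temperature on Raspberry Pi OS (value in millidegrees Celsius)
THERMAL_FILE = Path("/sys/class/thermal/thermal_zone0/temp")


def parse_millidegrees(raw: str) -> float:
    """Convert a sysfs reading such as "48312\n" to degrees Celsius."""
    return int(raw.strip()) / 1000


def read_cpu_temp(path: Path = THERMAL_FILE) -> float:
    """Read the current SoC temperature in degrees Celsius."""
    return parse_millidegrees(path.read_text())


def log_temps(interval_s: float = 10.0, samples: int = 6) -> None:
    """Print the temperature every interval_s seconds, e.g. while streaming."""
    for _ in range(samples):
        print(f"{time.strftime('%H:%M:%S')}  {read_cpu_temp():.1f} °C")
        time.sleep(interval_s)
```

Running log_temps() in a second terminal during a stream gives a rough picture of how hot the uncased Pi gets.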

Step 2 - Setting Up the Livestream and Saving the URL and Key

Step 3 - Running the ffmpeg / raspivid Command on the RPI

I use this command:

```shell
raspivid -o - -t 0 -vf -hf -fps 30 -b 6000000 | ffmpeg -re -ar 44100 -ac 2 -acodec pcm_s16le -f s16le -ac 2 -i /dev/zero -f h264 -i - -vcodec copy -acodec aac -ab 128k -g 50 -strict experimental -f flv rtmp://a.rtmp.youtube.com/live2/<SESSION>
```

raspivid captures video from a Raspberry Pi Camera module. The different options are:

- -o - sends the output to stdout, which here is piped to ffmpeg.

- -t 0 disables the timeout, so raspivid keeps capturing until it is stopped.

- -vf flips the image vertically and -hf flips it horizontally. Together they rotate the image 180 degrees.

- -fps 30 captures 30 frames per second.

- -b 6000000 sets the bitrate to 6 Mbit/s. That may be too much for the built-in Wi-Fi adapter, so I may have to reduce it.
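To get a feel for what -b 6000000 means for the Wi-Fi link, here is the arithmetic as a small Python helper. It assumes the encoder holds roughly the requested average bitrate; real H.264 output varies, and keyframes are much larger than the average frame.

```python
def stream_budget(bitrate_bps: int, fps: int) -> dict:
    """Rough data budget for a stream at a roughly constant average bitrate."""
    bytes_per_second = bitrate_bps / 8
    return {
        "mbit_per_s": bitrate_bps / 1_000_000,
        "kbytes_per_frame": bytes_per_second / fps / 1000,  # average only; keyframes are larger
        "gbytes_per_hour": bytes_per_second * 3600 / 1e9,
    }


print(stream_budget(6_000_000, 30))
# {'mbit_per_s': 6.0, 'kbytes_per_frame': 25.0, 'gbytes_per_hour': 2.7}
```

At 6 Mbit/s the Pi has to upload about 750 kB every second, roughly 2.7 GB per hour, and halving -b halves both figures.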

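The output is piped to ffmpeg, a tool for recording, converting and streaming audio and video. Options placed before an -i apply to that input; options after the last input apply to the output.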

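- -re reads the input at its native frame rate instead of as fast as possible.

- -ar 44100 sets the audio sampling frequency. Like the next three options, it appears before -i /dev/zero and therefore describes that input, not the camera. The Raspberry Pi Camera module doesn't capture audio, so these options belong to the silent audio track described below.

- -ac 2 sets the number of audio channels to two. It appears twice in the command; the second copy is redundant.

- -acodec pcm_s16le tells ffmpeg that the input audio is uncompressed PCM.

- -f s16le forces the input format to raw signed 16-bit little-endian samples.

- -i /dev/zero specifies the first input. /dev/zero provides an endless stream of zero bytes, which, read as 16-bit PCM, is silence. This most likely adds a silent audio track because YouTube expects live streams to include audio.

- -f h264 tells ffmpeg that the second input is a raw H.264 stream. H.264 (also known as MPEG-4 AVC) is the video format raspivid produces.

- -i - reads that second input from stdin, i.e. the output of raspivid.

- -vcodec copy copies the H.264 video as is, without re-encoding it, which spares the RPI's CPU.

- -acodec aac sets the output audio codec, so the silent track is encoded as AAC.

- -ab 128k sets the audio bitrate to 128 kbit/s.

- -g 50 sets the "Group of Pictures" size to 50, i.e. at most 50 frames between keyframes. Since the video is copied rather than re-encoded, this option probably has no effect here.

- -strict experimental allows ffmpeg to use encoders marked as experimental. Older ffmpeg versions required it for the built-in AAC encoder.

- -f flv rtmp://a.rtmp.youtube.com/live2/<SESSION> wraps the output in the FLV container used by RTMP and sends it to my YouTube stream, where <SESSION> is the stream key.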

The streaming key must be copied into the RPI CLI command.
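For reference, the same pipeline can be started from Python, which keeps the stream key out of my shell history. This is a sketch, not a tested replacement for the shell command: it reproduces the options above without -vf/-hf (which I ended up removing) and with the duplicated -ac 2 dropped, and the environment variable name YOUTUBE_STREAM_KEY is my own hypothetical choice.

```python
import os
import subprocess

INGEST_URL = "rtmp://a.rtmp.youtube.com/live2/"


def build_commands(stream_key: str, fps: int = 30, bitrate: int = 6_000_000):
    """Return the raspivid and ffmpeg argument lists for the pipeline."""
    raspivid = ["raspivid", "-o", "-", "-t", "0", "-fps", str(fps), "-b", str(bitrate)]
    ffmpeg = [
        "ffmpeg", "-re",
        # Input 0: silent stereo PCM read from /dev/zero
        "-ar", "44100", "-ac", "2", "-acodec", "pcm_s16le", "-f", "s16le", "-i", "/dev/zero",
        # Input 1: raw H.264 video from stdin (raspivid)
        "-f", "h264", "-i", "-",
        # Output: copy the video, encode the silence as AAC, wrap in FLV for RTMP
        "-vcodec", "copy", "-acodec", "aac", "-ab", "128k", "-g", "50",
        "-strict", "experimental", "-f", "flv", INGEST_URL + stream_key,
    ]
    return raspivid, ffmpeg


def stream(stream_key: str) -> int:
    """Run raspivid | ffmpeg and return ffmpeg's exit code."""
    raspivid_cmd, ffmpeg_cmd = build_commands(stream_key)
    camera = subprocess.Popen(raspivid_cmd, stdout=subprocess.PIPE)
    encoder = subprocess.Popen(ffmpeg_cmd, stdin=camera.stdout)
    camera.stdout.close()  # only ffmpeg holds the pipe now, so raspivid gets SIGPIPE if ffmpeg exits
    try:
        return encoder.wait()
    finally:
        camera.terminate()
        camera.wait()


def main() -> int:
    key = os.environ.get("YOUTUBE_STREAM_KEY")  # hypothetical variable name
    if not key:
        raise SystemExit("set YOUTUBE_STREAM_KEY to the stream key from YouTube")
    return stream(key)
```

On the Pi, exporting YOUTUBE_STREAM_KEY and calling main() should behave like the shell pipe. Closing camera.stdout in the parent process mirrors what the shell does, so neither program is left running if the other one dies.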

I had ffmpeg installed already, so I didn't need to recompile it. In the first streaming attempt, the image was upside down. After removing the -vf and -hf flags, the image was oriented correctly.

It took a short while before the stream appeared on my YouTube channel.

This makes it much easier to access streams from my RPI: as long as I have the link to the stream, I can watch it. I'll also be able to embed the stream in an HTML page.
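Embedding should only need the video ID from the stream's link. A minimal sketch, assuming YouTube's standard https://www.youtube.com/embed/<ID> embed URL; the helper names, the link formats handled and the iframe size are my own choices:

```python
from html import escape
from urllib.parse import parse_qs, urlparse


def video_id_from_url(url: str) -> str:
    """Extract the ID from a youtube.com/watch?v=..., youtu.be/... or youtube.com/live/... link."""
    parts = urlparse(url)
    if parts.hostname == "youtu.be":
        return parts.path.lstrip("/")
    if parts.path.startswith("/live/"):
        return parts.path[len("/live/"):]
    ids = parse_qs(parts.query).get("v")
    if not ids:
        raise ValueError(f"no video ID in {url!r}")
    return ids[0]


def youtube_embed(video_id: str, width: int = 640, height: int = 360) -> str:
    """Return an <iframe> snippet that embeds the video or live stream."""
    src = f"https://www.youtube.com/embed/{escape(video_id, quote=True)}"
    return (
        f'<iframe width="{width}" height="{height}" src="{src}" '
        'title="Raspberry Pi camera" allowfullscreen></iframe>'
    )
```

For example, youtube_embed(video_id_from_url(link)) produces a snippet that can be pasted into a page.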