This is done in much the same way as in linear regression: I calculate the partial derivatives of the cost function with respect to each weight.

The Back Propagation Algorithm:

Given a training set:

Set the delta accumulators to zero for every layer, one entry for each pair of input and output nodes in that layer.

Repeat for all training examples:

Forward propagation:

Set the initial activation values to a(1) = x(i).

Calculate the activation values for all layers using forward propagation.


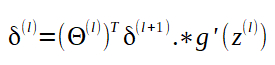

Now calculate the error of the output layer as a(L) - y(i), where a(L) is the activation of the last layer L.

Delta is then updated to delta plus the activation value multiplied by the error.
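As a rough sketch of the steps above, this is how the accumulation loop might look in Python with NumPy. The names `sigmoid` and `accumulate_deltas`, the sigmoid activation, the bias unit prepended to each layer, and the rule for passing the error back through the hidden layers are assumptions on my part (the usual textbook form), not something spelled out in the steps above.

```python
import numpy as np


def sigmoid(z):
    """Logistic activation (assumed; the steps above do not name one)."""
    return 1.0 / (1.0 + np.exp(-z))


def accumulate_deltas(thetas, X, Y):
    """Accumulate delta over all training examples.

    thetas: list of weight matrices; thetas[l] maps layer l (plus a bias
            unit) to layer l + 1, so its shape is (s_next, s_current + 1).
    X, Y:   arrays with one training example per row.
    """
    # Set deltas to zero for all layers.
    deltas = [np.zeros_like(theta) for theta in thetas]

    for x, y in zip(X, Y):
        # Forward propagation, starting from a(1) = x(i).
        inputs = []  # activation (with bias unit) fed into each layer
        a = x
        for theta in thetas:
            a = np.concatenate(([1.0], a))  # prepend the bias unit (assumed)
            inputs.append(a)
            a = sigmoid(theta @ a)

        # Error of the output layer: a(L) - y(i).
        error = a - y

        # Walk backwards through the layers.
        for l in reversed(range(len(thetas))):
            # delta := delta + error * activation (as an outer product).
            deltas[l] += np.outer(error, inputs[l])
            if l > 0:
                # Assumed hidden-layer rule: push the error back through the
                # weights, scale by the sigmoid derivative a * (1 - a),
                # then drop the bias component.
                a_l = inputs[l]
                error = ((thetas[l].T @ error) * a_l * (1.0 - a_l))[1:]

    return deltas


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    # Hypothetical 2-3-1 network and four made-up training examples.
    thetas = [rng.normal(size=(3, 3)), rng.normal(size=(1, 4))]
    X = rng.normal(size=(4, 2))
    Y = np.array([[0.0], [1.0], [1.0], [0.0]])
    for d in accumulate_deltas(thetas, X, Y):
        print(d.shape)
```

Dividing each accumulated delta by the number of training examples gives the partial derivatives of an unregularized cross-entropy cost, assuming that is the cost function in use.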
