I found an example that is using the sckit-learn package.

The Peer Project

This time, I've made some changes to the input data:

- I will make the neural network train for the actual training output data instead of a binary representation of that data.

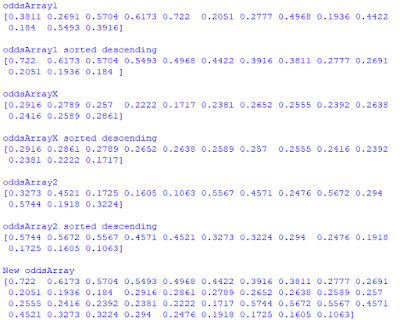

- I will sort the training input data in three groups of thirteen values:

|

| The values are initially arranged in triplets (likelihoods for events 1, X and 2) for thirteen samples. |

I sorted the array by descending values with a simple modification of the numpy sort command:

I use the MLPRegressor with some different random seeds and 10000 iterations.

The results are a bit disappointing. For some random seeds (and different sets of training/test data), the errors are smaller after 10000 iterations, compared to after one iteration.

|

| Seed 300, 10000 iterations. |

|

| Seed 400, 1 iteration |

|

| Seed 400, 10000 iterations |

|

| Seed 400, 1 iteration |

The error messages are reoccurring and indicates that the convergence is too weak. It seems not to be any easy-detectable link between inputs and the magnitude of the output.

Adding more iterations seems not to be the magical solution either.

|

| For 20 000 iterations, the error is smaller than for 10 000 iterations. But for 40 000 iterations, the error is increasing. |

It seems that it is very important to be able to interpret the neural networks!

Checking a Known Data Set

As a sanity check, I've been training the MLPRegressor on a small training set:

|

| This sample should be quite easy for an neural network to train. |

One iteration:

|

| The MLPRegressor wasn't able to converge after one sample. This makes sense, since it takes quite a number of iterations to converge. |

Ten iterations:

|

| I still see the warning about convergence, but the errors are smaller. This means that the algorithm is converging after all |

A Thousand Iterations:

| The error isn't shown anymore, and the errors are smaller now. |

A Million Iterations:

|

| Here, I'm running twice on the same setup. The first optimization converged much more poorly than the second one. The reason for this is probably an unfortunate selection of training data. |

The example above illustrates how important it is to have a big set of data to test and train on.

I'll finish this part of my Neural Network project for now. The next project will start in the next blog post and cover much more training data.

No comments:

Post a Comment